PilotWatch

by John Robinson @johnrobinsn

Recently I've wanted to experiment more with open code-generation models such as CodeGen, SantaCoder, Replit and, most recently, StarCoder. But the bar for comparison is GitHub's Copilot, so I decided to write a tool to help me dig into how Copilot works.

PilotWatch is a tool for intercepting and logging live calls from the VSCode Copilot plugin to the cloud-based Copilot backend without patching the plugin itself. I wrote it to get a deeper understanding of how the Copilot plugin "engineers" its prompts for code completion using the context and state from the VSCode environment.

Other potential uses include understanding what information is transmitted to GitHub when you use their service, and collecting small datasets, either for evaluating the captured code generation prompts against other models or for fine-tuning other code generation models.

PilotWatch takes the form of a logging proxy server (written in Node.js) that sits between the Copilot plugin and the Copilot backend. After starting it, you configure the Copilot plugin to forward its traffic to the PilotWatch proxy running on your local machine. PilotWatch in turn forwards each request directly to GitHub's backend systems and, when it receives the results, transmits them back to the Copilot plugin, logging all interactions to your local file system.
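The forward-then-log pattern described above can be sketched in a few lines. This is not PilotWatch's code (the tool itself is written in Node.js); it is a minimal Python illustration in which the upstream call is passed in as a plain function. The names `make_logging_forwarder` and `forward`, the record fields, and the file naming scheme are all assumptions made up for this example.

```python
import json
import time
from pathlib import Path
from typing import Callable


def make_logging_forwarder(
    forward: Callable[[dict], dict], log_dir: str
) -> Callable[[dict], dict]:
    """Wrap `forward` so every request/response pair is saved under `log_dir`.

    `forward` stands in for whatever actually relays the request upstream;
    it is a hypothetical hook, not part of PilotWatch.
    """
    out_dir = Path(log_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    counter = 0

    def handle(request: dict) -> dict:
        nonlocal counter
        # Relay the request unchanged and wait for the upstream reply.
        response = forward(request)
        counter += 1
        record = {
            "timestamp": time.time(),
            "request": request,
            "response": response,
        }
        # One JSON file per interaction (hypothetical naming scheme).
        path = out_dir / f"completion-{counter:06d}.json"
        path.write_text(json.dumps(record, indent=2), encoding="utf-8")
        # Hand the upstream reply back to the caller untouched.
        return response

    return handle
```

Because the client only ever sees the value returned by `forward`, the logging step stays invisible to it, which is the property that lets a proxy like this observe traffic without patching the plugin.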

PilotWatch also includes a simple web interface for viewing the code completion logs interactively with syntax highlighting etc.

Installation

You can refer to the README for instructions on how to run PilotWatch and how to set it up with VSCode.

Prompt Engineering

One of the reasons that Copilot works as well as it does is that it doesn't just send the last couple of lines you've typed as the prompt for code generation. Rather, the Copilot plugin includes a fair bit of additional context in the prompt. This additional context allows Copilot to correctly "guess" functions and variables from surrounding code, including from other files in the current project. The context is generated dynamically by the client-side Copilot plugin, which has fairly complicated logic to prioritize which bits of information to include in the somewhat limited 2k context window of the Codex LLM used by Copilot.

The Copilot plugin not only provides information about the text near the cursor location within the current document, but can also provide information from the 20 most recently accessed files of the same language as the current file. The code completion request can also include a suffix, which is a trailing prompt, so that the Codex model can "fill in the middle".
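To make the idea of prioritizing context under a fixed budget concrete, here is a small sketch. It is not Copilot's actual logic, which is considerably more involved. The `Snippet` type, the relevance `score`, the whitespace-based `count_tokens`, and the choice to render snippets as comments ahead of the prefix are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Snippet:
    source: str   # file the snippet came from
    text: str     # snippet contents
    score: float  # assumed relevance to the code near the cursor


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: whitespace-separated words."""
    return len(text.split())


def as_comment(snip: Snippet) -> str:
    """Render a snippet as a comment block so it reads as context, not code."""
    lines = [f"# From {snip.source}:"]
    lines += [f"# {line}" for line in snip.text.splitlines()]
    return "\n".join(lines) + "\n"


def build_prompt(prefix: str, suffix: str,
                 snippets: List[Snippet], budget: int) -> dict:
    """Fit the prefix, suffix and best-scoring snippets into `budget` tokens."""
    # The text around the cursor is always kept; snippets share what is left.
    remaining = budget - count_tokens(prefix) - count_tokens(suffix)
    chosen = []
    # Greedily take the most relevant snippets that still fit.
    for snip in sorted(snippets, key=lambda s: s.score, reverse=True):
        cost = count_tokens(snip.text)
        if cost <= remaining:
            chosen.append(snip)
            remaining -= cost
    header = "".join(as_comment(s) for s in chosen)
    # The suffix travels separately so the model can fill in the middle.
    return {"prompt": header + prefix, "suffix": suffix}
```

A greedy pass like this simply skips any snippet that does not fit and keeps trying smaller ones. A fuller version would also need to truncate the prefix when it alone exceeds the budget, which this sketch does not attempt.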

Parth Thakkar does a deep dive on the inner workings of the Copilot plugin here. Parth's article goes into a lot of detail and is a great reference.

Prompt Example

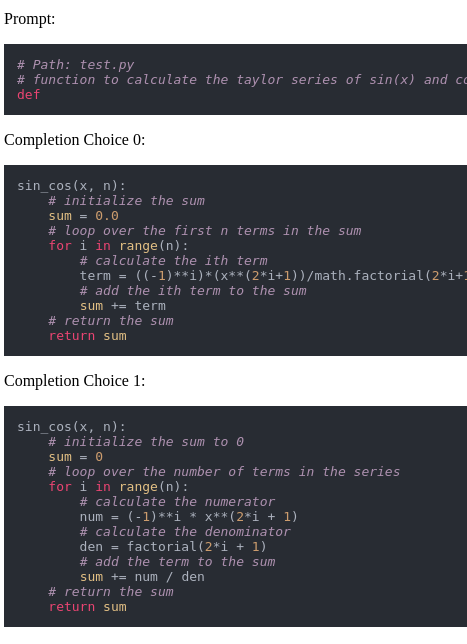

Here is a screenshot of PilotWatch rendering a single Copilot code generation request. You can see the prompt and a couple of responses returned from the Copilot backend.

Click Here to see the full code completion report.

Every code completion is captured into a JSON file with the following format.

Conclusion

I wrote this little tool to aid my investigation into how Copilot works and why it works so well. I hope you find it useful too.

Please like my content on Twitter if you enjoyed this article/project.

John Robinson © 2022-2025