UFO Lands on Highway! Or Depth Estimation using ML

by John Robinson @johnrobinsn

In this article, I'm going to talk a bit about deep learning models that allow you to estimate per pixel depth from images, a bit about UFOs and a bit about 3D visualization of depth maps. I also provide some code to let you try it out for yourself.

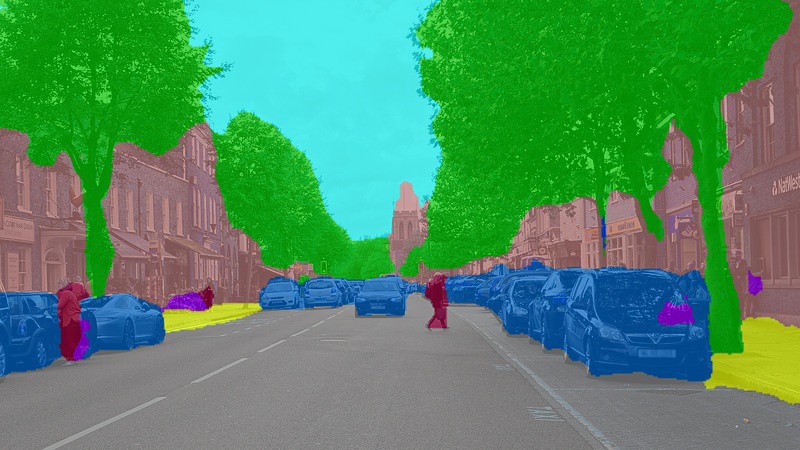

Convolutional Neural Networks (CNNs) and Supervised Learning have proven to be a powerful combination when it comes to solving classification problems. Networks are trained using a large number of labeled images for some fixed number of classes such as a dog or a cat etc. These models learn to identify visual features/patterns within the pixels of the image to determine whether it is a dog or a cat. These methods can be extended so that the model can not only give a single classifcation for the entire image but can instead provide a per pixel classification. In other words it can classify each pixel as belonging to one of a number of fixed class labels. These models are known as segmentation models. Here is an example where a segmentation model has been used to color code the pixels of an image, tagging trees with green and cars with blue etc.

But can similar models be used to learn other types of object features beyond class labels? What about estimating the per pixel depth (ie distance from camera) of everything in the image. Most people use both of their eyes to see the world. But it is your brain that does most of the work of perceiving a 3D world with depth. Even if you close one eye your brain has learned to use monocular cues such as edges, gradients, shape, lighting, shadows etc to form a pretty good understanding of the 3D world even without the benefits of binocular vision. So can a CNN model be trained to estimate depth using similar monocular depth cues? It turns out, Yes they can.

Here is an example where given the image of a kitten, the deep learning model MiDaS is able to generate a per pixel depth map. In order to visualize this depth map, I have turned it into a grayscale image where the darker pixels are further away and the lighter pixels are closer to the camera. Pretty good! You can even make out the depth details of the cobblestones.

I've created a Colab notebook that shows how to use MiDaS's pretrained models to generate depth maps and I also show how to use the JavaScript library, three.js to do a 3D interactive visualization of the depth map right in your browser.

Unlike in image segmentation where the model is trained using images along with associated discrete class labels. Depth estimation models are trained using images and associated depth maps. These depth maps provide the "depth labels" for each pixel during training. The big difference is that these "depth labels" are continuous values rather than categorical class labels. For training purposes the depth data can be obtained by using some additional depth sensing hardware along with a camera. So even though you still need additional equipment to gather the depth labels for supervising the training, the process can be automated and doesn't require labor-intensive manual labeling. After the model has been trained, we no longer need the additional hardware and use the model to infer the depth map using images alone.

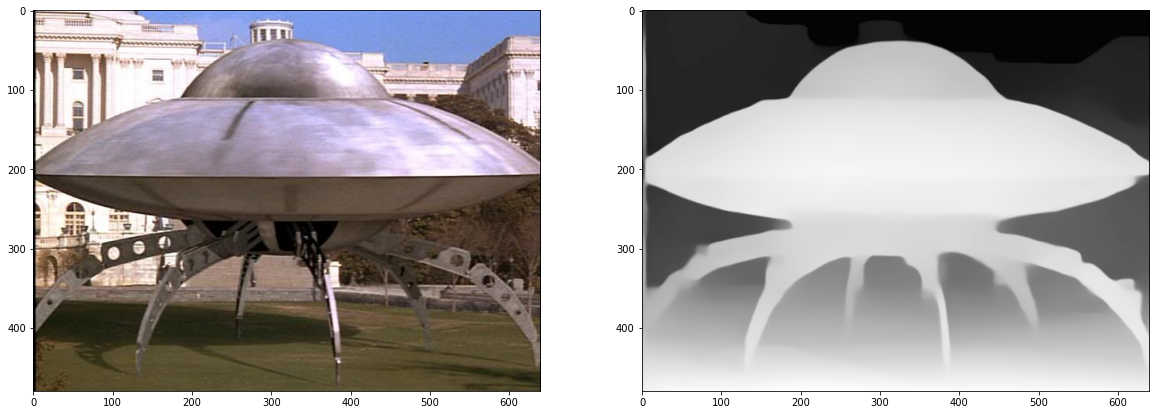

I think one of the most interesting things about depth estimation models is that they are able to map from image cues to some semblance of 3d structure in the natural world. Unlike classification models that can only identify object classes that they have been trained on. Depth estimation models are not trained with respect to class labels and therefore can be applied to images with arbitrary objects. As an example. Midas was never trained on images of UFOs (or at least I'm pretty sure). But Midas is still able to do a pretty good job of depth estimation given an image of a UFO.

Depth estimation models can be a powerful prior for helping to train models that are trying to decode 3d details of the world. For example, they can be used to reduce the number of training samples required for things like Nerf generation

MiDas is not perfect and the paper covers some examples of subtle and not so subtle failcases. And in this article I've made no attempt to make the depth values directly map to any spatial unit of measure. In terms of accuracy there are definitely errors. But I'm still impressed by the ability to recover 3d depth information from an image.

You can read more about the MiDaS model in their paper, Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-shot Cross-dataset Transfer and the code for training the model can be found in their github repository.

As a side note, it's pretty cool that they were able to use stock ResNET networks as the encoder for the depth estimation network.

Closing Thoughts #

I'm fascinated by the whole topic of perception. About how we can take in a flow of temporal noisy sensor data and form a perception of the 3d world that we live in. The depth model described here only gives us a per pixel "point cloud", there is no object identity or object flow over time. But there is alot of working going on in this area. Going from pixels directly to Radiance Light Fields or to 3D meshes.

Tesla has been pushing hard in this area using only cameras and neural networks to build models that go straight from pixels to vector space representations of lane lines or to voxels.

We have a long way to go to even figure out the "right" way to represent things using the tools that we have. But exciting to see the progress being made and to think about possible applications and ways to converge the different threads of advancement.

Share on Twitter | Discuss on Twitter

John Robinson © 2022-2025